The Limits of Reverse Image Search, Demonstrated with Google and Alison Brie


Reverse image search is a useful tool for detecting fake profile pictures and for identifying reused images.

But, like any tool, it can only be used effectively with a clear understanding of its limits.

Have you ever seen a user profile on social media that has a slightly blurred image caused by some "artistic" filter (like many of the filters provided by Instagram, Snapchat, or other image-centric social media sites)? Maybe it's art, maybe it's obfuscation.

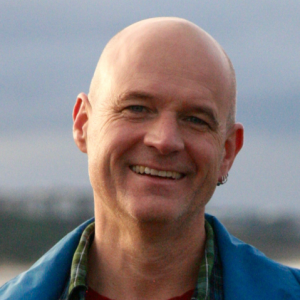

To demonstrate how reverse image search can provide mixed results, I'll start with a picture of a working actor, Alison Brie. This picture is from an interview on a late-night show.

This is a closeup pulled from the image via a screengrab.

With the unaltered closeup, the search returned a small number of matching pages, but the original page from IMDb was not among them.

Then, I added a glow effect to the image -- this is a common visual effect used on profile images.

This image returned no matches at all.

Conclusion

When evaluating accounts on social media sites, reverse image search is a useful tool, but be aware that it's neither precise nor fully accurate. If a profile pic is a closeup and/or subtly blurred, that can be an indication that the profile image was lifted from another source and modified to avoid detection.

Two notes unrelated to misinformation work, but still worth making:

First, this also highlights the extreme limitations of machine learning and AI, especially across large datasets. The machines are easily fooled.

Second, Google, what is up with your generic descriptions of images? The image in question shows a woman who was in her 30s when it was taken. The descriptive text related to "girl" is cringeworthy. Do better.